[ad_1]

![]()

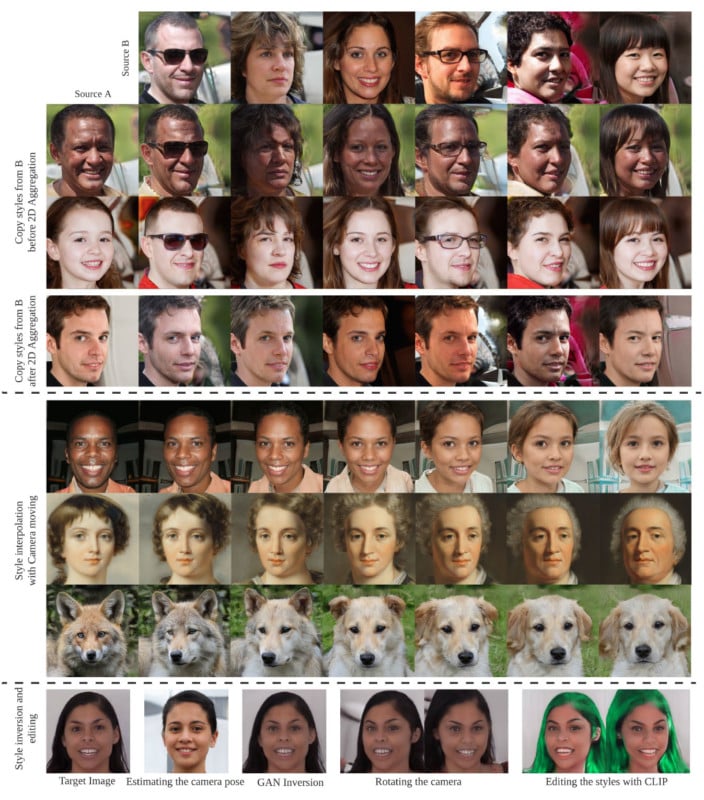

Researchers from the Max Planck Institute for Informatics and the University of Hong Kong have developed StyleNeRF, a 3D-aware generative model that creates high-resolution photorealistic images that can be trained on unstructured 2D images.

DPReview reports that the model is able to synthesize the images with multi-view consistency that, compared to existing approaches that struggle to create high-resolution images with fine details or produce 3D-inconsistent artifacts, StyleNeRF delivers efficient and more consistent images thanks to an integration of its neural radiance field (NeRF) into a style-based generator.

The researchers say that recent works on generative models enforce 3D structures by incorporating NeRF. However, they cannot synthesize high-resolution images with delicate details due to the computationally expensive rendering process of NeRF.

“We perform volume rendering only to produce a low-resolution feature map and progressively apply upsampling in 2D to address the first issue,” the researchers say, referring to the existing method’s difficulty with creating high-resolution images with fine details.

“To mitigate the inconsistencies caused by 2D upsampling, we propose multiple designs, including a better upsampler and a new regularization loss.”

StyleNeRF allows for control of a 3D camera pose and enables control of specific style attributes and incorporates 3D scene representations into a style-based generative model. This method allows StyleNeRF to generalize unseen perspectives of a photo and also supports more challenging tasks like zooming in and out and inversion.

“To prevent the expensive direct color image rendering from the original NeRF approach, we only use NeRF to produce a low-resolution feature map and upsample it progressively to high resolution,” the researchers explain.

“To improve 3D consistency, we propose several designs, including a desirable upsampler that achieves high consistency while mitigating artifacts in the outputs, a novel regularization term that forces the output to match the rendering result of the original NeRF and fixing the issues of view direction condition and noise injection.”

The model is trained using unstructured, real-world images. The progressing training strategy that the team outlines in their full research paper significantly improves the stability of the process.

As DPReview points out, the best visualization of this system is through the real-time demonstration video below. In it, the model is used to mix two images together to create a new one which can be fined tuned quickly and supports angles that aren’t visible in either of the original two input images.

Those who want more details about the StyleNeRF model can read the full research paper.

Image credits: Photos and video by StyleNeRF research team: Jiatao Gu, Lingjie Liu, Peng Wang, and Christian Theobalt and the Max Planck Institute for Informatics and the University of Hong Kong

[ad_2]